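0. Preparation

First, download all the installation packages and configuration files used in this article into /tmp/.

Run the following commands: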

cd /tmp

wget https://qiniu.tobehacker.com/bigdata/bigdata_package.zip

unzip bigdata_package.zip

Installing Ansible and getting started

(1) Install and configure Ansible

yum install epel-release -y

yum install ansible -y

ansible --version

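Note: my Ansible version is currently 2.9.27. Watch out for compatibility differences between Ansible versions, for example in how built-in variables are written.

(2) On the Ansible control node, edit /etc/ansible/ansible.cfg and set the following under [defaults]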

host_key_checking = False

remote_tmp = /tmp

(3) Generate a key pair on the Ansible control node; just press Enter at every prompt

ssh-keygen -t rsa

(4) Configure the Ansible inventory of remote hosts

Configure /etc/ansible/hosts as follows

master ansible_host=192.168.18.141 ansible_hostname=master

slave1 ansible_host=192.168.18.142 ansible_hostname=slave1

slave2 ansible_host=192.168.18.143 ansible_hostname=slave2

[bd]

master ansible_host=192.168.18.141 ansible_hostname=master

slave1 ansible_host=192.168.18.142 ansible_hostname=slave1

slave2 ansible_host=192.168.18.143 ansible_hostname=slave2

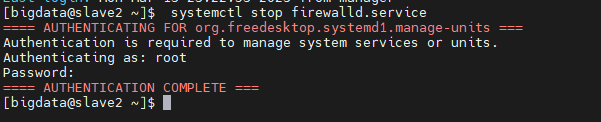

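Firewall configuration on the remote hosts

Use Ansible to stop the firewall on the remote cluster hosts with systemctl stop firewalld.service. This must be run as root. In production, configure proper firewall rules instead of simply turning the firewall off!

Run the following command

ansible bd -m shell -a 'systemctl stop firewalld.service' -u root --ask-pass

Configure time synchronization: see the Alibaba Cloud NTP service configuration tutorial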

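Creating the bigdata user on the remote hosts

The main task in this stage is to create the bigdata group and user

Create the bigdata user

The playbook that creates the bigdata user is shown below. In adduser.yml, replace password with the hash of your own password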

- name: Create a user and set its password (the password must be a hash)
  hosts: bd   # ansible-playbook adduser.yml; needs privilege escalation to root on the remote hosts
  become: yes
  become_method: su
  become_user: root
  vars_prompt:
    - name: "user_name"
      prompt: "Enter the user name to create on the remote hosts"
      private: no
  tasks:
    - name: "Create user {{ user_name }}"
      user:
        name: "{{ user_name }}"
        createhome: yes
        password: "$6$FwuYepy4drnZwbvL$w3ZT32BkwU0v4t86I98gbctoNArBsaTki.nqKdjjbMwKlBJrdqZsrRItI7Kcxx6UoeaN4j0FUABw.EiT.pm5Z/"
        # The value must be a password hash, not plaintext. The plaintext password used in this article is 123456.
        # To generate a hash:
        # yum -y install python-pip
        # pip install passlib
        # python -c "from passlib.hash import sha512_crypt; import getpass; print(sha512_crypt.using(rounds=5000).hash(getpass.getpass()))"

Run the command below. It runs the tasks as the remote user root, so you will be asked for the remote root password

ansible-playbook adduser.yml -u root --ask-pass
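Before pasting a value into the password field, it can help to confirm that it really has the shape of a SHA-512 crypt hash rather than plaintext. The sketch below is only a format check (it does not verify the password itself); the function name looks_like_sha512_crypt is made up for this illustration.

```python
import re

# SHA-512 crypt layout: $6$[rounds=N$]salt$hash
# salt: up to 16 characters, hash: 86 characters, alphabet ./0-9A-Za-z
SHA512_CRYPT_RE = re.compile(
    r"^\$6\$(rounds=\d+\$)?[./0-9A-Za-z]{1,16}\$[./0-9A-Za-z]{86}$"
)


def looks_like_sha512_crypt(value: str) -> bool:
    """Return True if value has the shape of a SHA-512 crypt hash."""
    return SHA512_CRYPT_RE.match(value) is not None


if __name__ == "__main__":
    # The hash used in adduser.yml above, split only to keep the line short
    article_hash = ("$6$FwuYepy4drnZwbvL$w3ZT32BkwU0v4t86I98gbctoNArBsaTki."
                    "nqKdjjbMwKlBJrdqZsrRItI7Kcxx6UoeaN4j0FUABw.EiT.pm5Z/")
    print(looks_like_sha512_crypt(article_hash))  # True
    print(looks_like_sha512_crypt("123456"))      # False: plaintext
```

A plaintext value in the password field would be stored as-is in /etc/shadow and the user could not log in with it, which is why the check is worth doing.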

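Passwordless SSH from the Ansible control node to the bigdata user on the cluster hosts

(1) Set up passwordless login from the root user on the Ansible control node to the bigdata user on the remote cluster hosts

ansible_to_other.yml is as follows: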

- hosts: bd   # Passwordless login from the control node to the cluster hosts. Run: ansible-playbook ansible_to_other.yml -u root --ask-pass
  vars_prompt:
    - name: "local_name"
      prompt: "Enter the local user whose public key should be installed"
      private: no
    - name: "remote_user_name"
      prompt: "Enter the remote user that should accept the key"
      private: no
  tasks:
    - name: Install the control node root user's public key
      authorized_key:
        user: "{{ remote_user_name }}"
        # Build the path by concatenation instead of nesting {{ }} inside an expression
        key: "{{ lookup('file', '/' + local_name + '/.ssh/id_rsa.pub') }}"
        state: present
      when:
        - local_name == 'root'

    - name: Install a non-root control node user's public key
      authorized_key:
        user: "{{ remote_user_name }}"
        key: "{{ lookup('file', '/home/' + local_name + '/.ssh/id_rsa.pub') }}"
        state: present
      when:
        - local_name != 'root'

Run the following command

ansible-playbook ansible_to_other.yml -u root --ask-pass

Create the big data software and data directories and grant them to the bigdata user and group

- name: Create the big data software and data directories and hand them to bigdata
  hosts: bd   # Run: ansible-playbook create_bigdata_dir.yml -u root --ask-pass
  vars_prompt:
    - name: "owner_name"
      prompt: "Enter the owner user name"
      private: no
    - name: "group_name"
      prompt: "Enter the owner group name"
      private: no
    - name: "software_target"
      prompt: "Enter the software directory, e.g. /opt/bigdata"
      private: no
    - name: "data_target"
      prompt: "Enter the data directory, e.g. /data/bigdata"
      private: no
    - name: "your_mode"
      prompt: "Enter the directory permission mode, e.g. 0775"
      private: no
  tasks:
    - name: "Create the software directory {{ software_target }}"
      file:
        path: "{{ software_target }}"
        state: directory
        owner: root
        group: root
        mode: '0755'

    - name: "Create the data directory {{ data_target }}"
      file:
        path: "{{ data_target }}"
        state: directory
        owner: root
        group: root
        mode: '0755'

    - name: "Set software directory ownership: owner {{ owner_name }}, group {{ group_name }}, mode {{ your_mode }}"
      file:
        path: "{{ software_target }}"
        state: directory
        recurse: yes
        owner: "{{ owner_name }}"
        group: "{{ group_name }}"
        mode: "{{ your_mode }}"

    - name: "Set data directory ownership: owner {{ owner_name }}, group {{ group_name }}, mode {{ your_mode }}"
      file:
        path: "{{ data_target }}"
        state: directory
        recurse: yes
        owner: "{{ owner_name }}"
        group: "{{ group_name }}"
        mode: "{{ your_mode }}"

Run the following command

ansible-playbook create_bigdata_dir.yml -u root --ask-pass
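The playbook prompts for a permission mode such as 0775. As a quick way to see what an octal mode means before typing it in, the following sketch converts it into the familiar ls-style string; describe_dir_mode is a helper name invented for this example.

```python
import stat


def describe_dir_mode(mode_text: str) -> str:
    """Turn an octal mode string such as '0775' into an ls-style string."""
    mode = int(mode_text, 8)                    # parse the text as octal
    return stat.filemode(stat.S_IFDIR | mode)   # mark it as a directory


if __name__ == "__main__":
    print(describe_dir_mode("0775"))  # drwxrwxr-x
    print(describe_dir_mode("0755"))  # drwxr-xr-x
```

With 0775 the owner and the group can both write, which matches the intent of letting the bigdata user and group manage these directories.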

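Configure passwordless login between the bigdata users of the cluster hosts: hosts_no_password_login.yml

Note: switch to root on the Ansible control node before running this command, because it performs privileged operations on the control node. It is best to do everything as root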

- hosts: bd   # Mutual passwordless SSH between the managed hosts. Run: ansible-playbook hosts_no_password_login.yml -u root --ask-pass
  gather_facts: no
  vars_prompt:
    - name: "user_name"
      prompt: "Enter the user name for mutual passwordless login on the remote hosts"
      private: no
  vars:
    ssh_key_length: 4096
  tasks:
    - name: Disable the prompt on first SSH connection
      shell: sed -i "s/^.*StrictHostKeyChecking.*$/ StrictHostKeyChecking no/g" /etc/ssh/ssh_config

    - name: Create the SSH key directory for root
      file:
        path: /root/.ssh
        state: directory
        mode: '0700'
      when: user_name == 'root'

    - name: Generate an SSH key pair for root
      command: ssh-keygen -t rsa -b {{ ssh_key_length }} -f /root/.ssh/id_rsa -N ''
      args:
        creates: "/root/.ssh/id_rsa"
      when: user_name == 'root'

    - name: "Create the SSH key directory for {{ user_name }}"
      file:
        path: "/home/{{ user_name }}/.ssh"
        state: directory
        group: "{{ user_name }}"
        owner: "{{ user_name }}"
        mode: '0700'
      when: user_name != 'root'

    # The original task name also referenced user_id.stdout, which is never registered
    - name: "Generate an SSH key pair for {{ user_name }}"
      command: "ssh-keygen -t rsa -b {{ ssh_key_length }} -f /home/{{ user_name }}/.ssh/id_rsa -N ''"
      args:
        creates: "/home/{{ user_name }}/.ssh/id_rsa"
      when: user_name != 'root'

    - name: "Set ownership of the .ssh directory of {{ user_name }}"
      shell: "chown -R {{ user_name }}:{{ user_name }} /home/{{ user_name }}/.ssh/"
      when: user_name != 'root'

    - name: Remove /tmp/ssh/ on the Ansible control node   # needs root on the control node
      file: path=/tmp/ssh/ state=absent
      delegate_to: 127.0.0.1   # local_action would work just as well

    - name: Fetch root's public key to the control node
      fetch:
        src: /root/.ssh/id_rsa.pub
        dest: /tmp/ssh/
      when:
        - user_name == 'root'

    - name: "Fetch the public key of {{ user_name }} to the control node"
      fetch:
        src: "/home/{{ user_name }}/.ssh/id_rsa.pub"
        dest: /tmp/ssh/
      when:
        - user_name != 'root'

    - name: Merge all public keys into one file
      local_action: shell find /tmp/ssh/* -type f -exec sh -c 'cat {}>>/tmp/ssh/authorized_keys.log' \;
      run_once: true

    - name: Read /tmp/ssh/authorized_keys.log and register it as keys_log
      shell: cat /tmp/ssh/authorized_keys.log
      delegate_to: 127.0.0.1
      register: keys_log

    - name: Distribute the merged public keys for root
      blockinfile:
        path: "/root/.ssh/authorized_keys"
        block: "{{ keys_log.stdout }}"
        backup: yes
        create: yes
        insertafter: 'EOF'
        mode: '0600'
      when:
        - user_name == 'root'

    - name: "Create the authorized_keys file for {{ user_name }}"
      file:
        path: "/home/{{ user_name }}/.ssh/authorized_keys"
        state: touch
        owner: "{{ user_name }}"
        group: "{{ user_name }}"
        mode: '0600'   # authorized_keys does not need the execute bit
      when:
        - user_name != 'root'

    - name: "Distribute the merged public keys for {{ user_name }}"
      blockinfile:
        path: "/home/{{ user_name }}/.ssh/authorized_keys"
        block: "{{ keys_log.stdout }}"
        backup: yes
        insertafter: 'EOF'
        owner: "{{ user_name }}"
        group: "{{ user_name }}"
        mode: '0600'
      when:
        - user_name != 'root'

Run the command

ansible-playbook hosts_no_password_login.yml -u root --ask-pass
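The key step in this playbook is merging the public keys that fetch collected on the control node. By default fetch stores each file under dest/<inventory host>/<remote path>, so the keys end up in one subdirectory per host below /tmp/ssh/. The sketch below shows the same merge in Python with duplicate keys dropped; merge_public_keys and the sample key strings are illustrative assumptions, not part of the playbook.

```python
import tempfile
from pathlib import Path


def merge_public_keys(fetch_root: Path) -> str:
    """Collect every *.pub file under fetch_root into one authorized_keys text.

    Each key is kept once; order follows the sorted file paths.
    """
    seen = set()
    merged = []
    for pub_file in sorted(fetch_root.rglob("*.pub")):
        for line in pub_file.read_text().splitlines():
            key = line.strip()
            if key and key not in seen:
                seen.add(key)
                merged.append(key)
    return "\n".join(merged) + ("\n" if merged else "")


if __name__ == "__main__":
    # Recreate the layout fetch would produce, using placeholder keys
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for host in ("master", "slave1", "slave2"):
            ssh_dir = root / host / "home" / "bigdata" / ".ssh"
            ssh_dir.mkdir(parents=True)
            (ssh_dir / "id_rsa.pub").write_text(f"ssh-rsa PLACEHOLDER bigdata@{host}\n")
        print(merge_public_keys(root), end="")
```

Dropping duplicates matters if the merge is ever run without first deleting the old output, since the shell version appends with >> and would otherwise keep growing.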

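1. Cluster component versions and deployment environment

| Component | Version | Mirror link | Personal link |
|---|---|---|---|
| JDK | jdk-8u202 | link | link |
| Zookeeper | 3.5.10 | link | link |
| Hadoop | 3.3.4 | link | link |
| Scala | 2.13.10 | link | link |
| Spark | 3.3.2 | link to Spark 3.3.2 built for Scala 2.13 | link |

Note: for the compatibility of Scala 2.13.10 with Spark 3.3.2, see here (click to view)

Environment deployment and distribution table

Process overview (1 = present, 0 = absent)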

2. Installing the components

2.1 CentOS 7 host base configuration

(1) Configure the cluster hostnames

Change the hostname of every host; hostname.yml is as follows

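- hosts: bd   # ansible-playbook hostname.yml -u root --ask-pass
  tasks:
    - name: Set the hostname
      # inventory_hostname is the name from /etc/ansible/hosts (master, slave1, slave2).
      # With fact gathering on, the gathered fact ansible_hostname takes precedence over the
      # inventory variable of the same name and reports the host's current name instead.
      shell: "echo {{ inventory_hostname|quote }} > /etc/hostname"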

Run the following command

ansible-playbook hostname.yml -u root --ask-pass

(2) Configure /etc/hosts on the cluster hosts

The /etc/hosts entries for the three hosts

hosts.yml is as follows

- hosts: bd
  remote_user: root
  tasks:
    - name: Configure /etc/hosts
      blockinfile:
        path: "/etc/hosts"
        block: |
          192.168.18.141 master
          192.168.18.142 slave1
          192.168.18.143 slave2
        backup: yes
        create: yes

Run the following command

ansible-playbook hosts.yml -u root --ask-pass
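The reason hosts.yml can be run repeatedly without duplicating entries is that blockinfile wraps its text in marker lines (by default "# BEGIN ANSIBLE MANAGED BLOCK" and "# END ANSIBLE MANAGED BLOCK") and replaces whatever sits between them on later runs. The following is a rough sketch of that idea only, not the module's actual code; upsert_block is a name chosen for the example.

```python
BEGIN = "# BEGIN ANSIBLE MANAGED BLOCK"
END = "# END ANSIBLE MANAGED BLOCK"


def upsert_block(text: str, block: str) -> str:
    """Insert the marked block into text, or replace it if already present."""
    lines = text.splitlines()
    managed = [BEGIN] + block.strip().splitlines() + [END]
    if BEGIN in lines and END in lines and lines.index(BEGIN) < lines.index(END):
        start, end = lines.index(BEGIN), lines.index(END)
        lines[start:end + 1] = managed   # later runs: replace in place
    else:
        lines.extend(managed)            # first run: append at end of file
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    hosts_file = "127.0.0.1 localhost\n"
    cluster = ("192.168.18.141 master\n"
               "192.168.18.142 slave1\n"
               "192.168.18.143 slave2\n")
    once = upsert_block(hosts_file, cluster)
    twice = upsert_block(once, cluster)
    print(once, end="")
    print(once == twice)  # True: a second run changes nothing
```

Because only the text between the markers is touched, any entries already in /etc/hosts outside the block are preserved.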

Install the JDK on every host (TODO: do not hard-code the bigdata user)

The ansible-playbook file jdk_install.yml is as follows:

- hosts: bd   # JDK installation. Run: ansible-playbook jdk_install.yml -u root --ask-pass
  vars:
    JDK_DIR: "/opt/bigdata/jdk"
    JDK_VERSION: "jdk1.8.0_202"
  tasks:
    - name: Create /opt/bigdata/jdk
      file:
        path: "{{ JDK_DIR }}"
        state: directory
        owner: bigdata
        group: bigdata
        mode: '0775'

    - name: Unpack and install the JDK
      unarchive:
        src: "/tmp/bigdata_package/jdk-8u202-linux-x64.tar.gz"
        dest: "{{ JDK_DIR }}"
        owner: bigdata
        group: bigdata
        mode: '0775'

    - name: Set environment variables in /etc/profile
      lineinfile:
        dest: "/etc/profile"
        line: "{{ item.value }}"
        state: present
      with_items:
        - { value: "export JAVA_HOME={{ JDK_DIR }}/{{ JDK_VERSION }}" }
        - { value: "export JRE_HOME=${JAVA_HOME}/jre" }
        - { value: "export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib" }
        - { value: "export PATH=$JAVA_HOME/bin:$PATH" }

    - name: Set environment variables in /etc/bashrc
      lineinfile:
        dest: "/etc/bashrc"
        line: "{{ item.value }}"
        state: present
      with_items:
        - { value: "export JAVA_HOME={{ JDK_DIR }}/{{ JDK_VERSION }}" }
        - { value: "export JRE_HOME=${JAVA_HOME}/jre" }
        - { value: "export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib" }
        - { value: "export PATH=$JAVA_HOME/bin:$PATH" }

    # Each task runs in its own shell, so this only checks that both files can be sourced
    - name: Load the environment variables
      become: true
      become_method: su
      become_user: root
      shell: "source /etc/profile && source /etc/bashrc"

    # Source /etc/profile in the same shell so that java is on PATH
    - name: Check the Java version
      shell: "source /etc/profile && java -version"
      register: java_version_info

    - name: Print the Java version information
      debug:
        msg: "{{ java_version_info }}"

Run the command

ansible-playbook jdk_install.yml -u root --ask-pass

Verify that the current JDK supports AES-256, in preparation for Kerberos

VerifyAES256ForKerberos.java is as follows

// In JDK 8u161 and later the unlimited-strength cryptography policy is enabled by default,
// so 256-bit AES keys work without extra configuration.
// In 8u151/8u152, set the following property in jre/lib/security/java.security:
// crypto.policy=unlimited
// This program tests whether the current Java runtime supports 256-bit AES keys.
// If it prints "Java environment supports 256-bit AES key length.", the runtime supports
// 256-bit AES keys and can be used with Kerberos.
// Under a limited policy, cipher.init throws java.security.InvalidKeyException instead.
import javax.crypto.Cipher;
import javax.crypto.spec.SecretKeySpec;
import java.util.Arrays;
import java.security.SecureRandom;

public class VerifyAES256ForKerberos {
    public static void main(String[] args) throws Exception {
        // 32 random bytes = a 256-bit key
        byte[] keyBytes = new byte[32];
        new SecureRandom().nextBytes(keyBytes);
        SecretKeySpec key = new SecretKeySpec(keyBytes, "AES");

        // Encrypt and then decrypt a short message with that key
        Cipher cipher = Cipher.getInstance("AES");
        cipher.init(Cipher.ENCRYPT_MODE, key);
        byte[] plaintext = "test".getBytes();
        byte[] ciphertext = cipher.doFinal(plaintext);
        cipher.init(Cipher.DECRYPT_MODE, key);
        byte[] decryptedtext = cipher.doFinal(ciphertext);

        if (Arrays.equals(plaintext, decryptedtext)) {
            System.out.println("Java environment supports 256-bit AES key length.");
        } else {
            System.out.println("Java environment does not support 256-bit AES key length.");
        }
    }
}

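verify_aes256.yml is as follows

- name: Verify that Java on the cluster hosts supports AES-256, in preparation for Kerberos
  hosts: bd
  become: yes
  tasks:
    - name: Copy the Java file to /tmp on the remote host
      copy:
        src: /tmp/bigdata_package/VerifyAES256ForKerberos.java
        dest: /tmp/VerifyAES256ForKerberos.java

    # Source /etc/profile first so that javac and java are on PATH in this shell
    - name: Compile with javac
      shell: "source /etc/profile && cd /tmp && javac -classpath /tmp VerifyAES256ForKerberos.java"

    - name: Run the check with java
      shell: "source /etc/profile && cd /tmp && java -classpath /tmp VerifyAES256ForKerberos"
      register: info

    - name: Show the result
      debug:
        msg: "{{ info.stdout }}"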

Run the following command

ansible-playbook verify_aes256.yml -u root --ask-pass

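When AES-256 is supported, the output looks like this:

ZooKeeper installation

Install with ansible-playbook; zk_install.yml is as follows

Note: change the zoo.cfg settings in zk_install.yml to match your own hosts (the server IDs are the last octet of each IP address), as shown in the figure below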

- hosts: bd   # ZooKeeper installation. Run: ansible-playbook zk_install.yml -u bigdata --ask-become-pass
  vars_prompt:
    - name: "remote_user"
      prompt: "Enter the remote user to install as"
      private: no
    - name: "remote_group"
      prompt: "Enter the remote group to install as"
      private: no
  vars:
    ansibleZkDir: /tmp/bigdata_package/apache-zookeeper-3.5.10-bin.tar.gz   # package path on the control node
    remoteZkDir: /opt/bigdata/zookeeper                                      # remote installation directory
    zkVersion: apache-zookeeper-3.5.10-bin                                   # must match the archive's top-level directory
  tasks:
    - name: Create the /opt/bigdata/zookeeper directory
      file:
        path: "{{ remoteZkDir }}"
        state: directory
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'
        recurse: yes

    - name: Create the ZooKeeper data root directory
      file:
        path: "/data/bigdata/{{ zkVersion }}"
        state: directory
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'
        recurse: yes

    - name: Create the ZooKeeper data directory
      file:
        path: "/data/bigdata/{{ zkVersion }}/data"
        state: directory
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'
        recurse: yes

    - name: "Copy and unpack the ZooKeeper package"
      unarchive:
        src: "{{ ansibleZkDir }}"
        dest: "{{ remoteZkDir }}"
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"

    - name: "Set environment variables in /etc/profile"
      lineinfile:
        dest: "/etc/profile"
        line: "{{ item.value }}"
        state: present
      become: yes
      become_method: su
      become_user: root
      with_items:
        - { value: "export ZOOKEEPER_HOME={{ remoteZkDir }}/{{ zkVersion }}" }
        - { value: "export PATH=$PATH:$ZOOKEEPER_HOME/bin" }

    - name: "Set environment variables in /etc/bashrc"
      lineinfile:
        dest: "/etc/bashrc"
        line: "{{ item.value }}"
        state: present
      become: yes
      become_method: su
      become_user: root
      with_items:
        - { value: "export ZOOKEEPER_HOME={{ remoteZkDir }}/{{ zkVersion }}" }
        - { value: "export PATH=$PATH:$ZOOKEEPER_HOME/bin" }

    - name: Load the environment variables
      become: true
      become_method: su
      become_user: root
      shell: "source /etc/profile && source /etc/bashrc"

    - name: Write the ZooKeeper configuration
      blockinfile:
        path: "{{ remoteZkDir }}/{{ zkVersion }}/conf/zoo.cfg"
        block: |
          tickTime=2000
          initLimit=10
          syncLimit=5
          dataDir=/data/bigdata/{{ zkVersion }}/data
          clientPort=2181
          server.141=master:2888:3888
          server.142=slave1:2888:3888
          server.143=slave2:2888:3888
        backup: yes
        create: yes
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'

    - name: Create the myid file
      file:
        path: "/data/bigdata/{{ zkVersion }}/data/myid"
        state: touch
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'

    # Takes characters 12-15 of the IP text, so it assumes addresses shaped like 192.168.18.xxx
    - name: Set the ZooKeeper myid from the host IP
      shell: hostname -i | cut -c 12-15 | sed 's/\.//g' > "/data/bigdata/{{ zkVersion }}/data/myid"

    # Source /etc/profile first so that JAVA_HOME is available to zkServer.sh
    - name: "Start ZooKeeper as {{ remote_user }}"
      become: true
      become_method: su
      become_user: "{{ remote_user }}"
      shell: "source /etc/profile && {{ remoteZkDir }}/{{ zkVersion }}/bin/zkServer.sh start {{ remoteZkDir }}/{{ zkVersion }}/conf/zoo.cfg"
      register: zk_start_info

    - debug:
        msg: "{{ zk_start_info }}"

Run the following command

ansible-playbook zk_install.yml -u bigdata --ask-become-pass
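The myid task in the playbook extracts characters 12 to 15 of the IP address, which gives the last octet only for addresses shaped like 192.168.18.xxx. As an illustration of why that is fragile, the sketch below compares it with taking the last octet directly; both function names are invented for this example, and the 1 to 255 range check reflects the range ZooKeeper documents for server ids.

```python
import ipaddress


def myid_from_ip(ip: str) -> int:
    """Use the last octet of an IPv4 address as the ZooKeeper server id."""
    addr = ipaddress.IPv4Address(ip)   # raises ValueError on malformed input
    last_octet = int(addr) & 0xFF
    if not 1 <= last_octet <= 255:
        raise ValueError(f"{ip}: last octet {last_octet} is not a valid myid")
    return last_octet


def fixed_columns(ip: str) -> str:
    """Mimic the playbook's extraction: characters 12-15, dots removed."""
    return ip[11:15].replace(".", "")


if __name__ == "__main__":
    for ip in ("192.168.18.141", "192.168.1.141", "10.0.0.5"):
        print(ip, myid_from_ip(ip), repr(fixed_columns(ip)))
    # 192.168.18.141 141 '141'
    # 192.168.1.141 141 '41'
    # 10.0.0.5 5 ''
```

On the article's 192.168.18.x network both approaches agree, so the playbook works as written; on other subnets the server.N entries in zoo.cfg and the myid files would no longer match.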

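Hadoop deployment

Hadoop installation

(1) First put the Hadoop configuration files on the Ansible control node under /tmp/bigdata_package/conf, the directory the playbook below copies them from

Download: Hadoop configuration files

(2) The ansible-playbook file is as follows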

- hosts: bd   # Run: ansible-playbook hadoop_install.yml -u bigdata --ask-become-pass
  vars_prompt:
    - name: "remote_user"
      prompt: "Enter the remote user to install as"
      private: no
    - name: "remote_group"
      prompt: "Enter the remote group to install as"
      private: no
  vars:
    ansibleHpDir: /tmp/bigdata_package/hadoop-3.3.4.tar.gz
    remoteHpDir: /opt/bigdata/hadoop
    hpVersion: hadoop-3.3.4   # no leading slash; paths below join it with "/"
  tasks:
    - name: Create the /opt/bigdata/hadoop installation directory
      file:
        path: "{{ remoteHpDir }}"
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        state: directory
        recurse: yes
        mode: '0775'

    - name: Create the Hadoop runtime directories
      file:
        path: "{{ item }}"
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        state: directory
        recurse: yes
        mode: '0775'
      with_items:
        - /opt/bigdata/hadoop
        - /data/bigdata/hadoop/tmp
        - /data/bigdata/hadoop/journal
        - /data/bigdata/hadoop/dfs/name
        - /data/bigdata/hadoop/dfs/data

    - name: "Copy and unpack the Hadoop package"
      unarchive:
        remote_src: no
        src: "{{ ansibleHpDir }}"
        dest: "{{ remoteHpDir }}"
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'

    - name: Copy the configuration files to the remote nodes
      copy:
        src: /tmp/bigdata_package/conf/
        dest: "{{ remoteHpDir }}/{{ hpVersion }}/etc/hadoop"
        group: "{{ remote_group }}"
        owner: "{{ remote_user }}"
        mode: '0775'
        backup: yes

    - name: "Set environment variables in /etc/profile"
      lineinfile:
        dest: "/etc/profile"
        line: "{{ item.value }}"
        state: present
      become: yes
      become_method: su
      become_user: root
      with_items:
        - { value: "export HADOOP_HOME={{ remoteHpDir }}/{{ hpVersion }}" }
        - { value: "export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin" }

    - name: "Set environment variables in /etc/bashrc"
      lineinfile:
        dest: "/etc/bashrc"
        line: "{{ item.value }}"
        state: present
      become: yes
      become_method: su
      become_user: root
      with_items:
        - { value: "export HADOOP_HOME={{ remoteHpDir }}/{{ hpVersion }}" }
        - { value: "export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin" }

    - name: Load the environment variables
      become: true
      become_method: su
      become_user: root
      shell: "source /etc/profile && source /etc/bashrc"

Install with the following command

ansible-playbook hadoop_install.yml -u bigdata --ask-become-pass

Starting Hadoop

On all three nodes, start the JournalNode

hdfs --daemon start journalnode

On master, format the NameNode

hdfs namenode -format

On master, start the NameNode

hdfs --daemon start namenode

On slave1, bootstrap the standby NameNode and then start it

hdfs namenode -bootstrapStandby

hdfs --daemon start namenode

On all three nodes, start the DataNode

hdfs --daemon start datanode

On master, start the YARN cluster

start-yarn.sh

On master (a NameNode host), format the ZKFC state in ZooKeeper

hdfs zkfc -formatZK

On master and slave1, start the DFSZKFailoverController process

hdfs --daemon start zkfc
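Since the start-up steps are order-sensitive (for example, the JournalNodes have to be running before the NameNode is formatted, and the standby can only be bootstrapped after the first NameNode is up), it may help to keep the sequence as data and print it as a checklist. The sketch below is a dry run only and executes nothing; START_PLAN simply restates the steps above, and the helper names are made up for this example.

```python
from typing import List, Sequence, Tuple

ALL_NODES = ("master", "slave1", "slave2")

# (target nodes, command), in the order listed in the text above
START_PLAN: List[Tuple[Tuple[str, ...], str]] = [
    (ALL_NODES, "hdfs --daemon start journalnode"),
    (("master",), "hdfs namenode -format"),
    (("master",), "hdfs --daemon start namenode"),
    (("slave1",), "hdfs namenode -bootstrapStandby"),
    (("slave1",), "hdfs --daemon start namenode"),
    (ALL_NODES, "hdfs --daemon start datanode"),
    (("master",), "start-yarn.sh"),
    (("master",), "hdfs zkfc -formatZK"),
    (("master", "slave1"), "hdfs --daemon start zkfc"),
]


def render_plan(plan) -> List[str]:
    """Format the plan as numbered checklist lines."""
    return [f"{step}. [{', '.join(nodes)}] {command}"
            for step, (nodes, command) in enumerate(plan, start=1)]


def step_of(plan, nodes: Sequence[str], command: str) -> int:
    """Return the 1-based position of a (nodes, command) step in the plan."""
    return plan.index((tuple(nodes), command)) + 1


if __name__ == "__main__":
    print("\n".join(render_plan(START_PLAN)))
```

The same list could later drive ad-hoc ansible commands per step, but that is left out here to keep the sketch free of side effects.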

3. Accessing the web UIs

First add the following entries to the hosts file on your Windows 10 machine:

192.168.18.141 master

192.168.18.142 slave1

192.168.18.143 slave2

HDFS web UI

YARN web UI

Click to visit: YARN web UI on the master node

Note: common Hadoop 3.x ports

HDFS NameNode internal (RPC) port, typically: 8020/9000/9820

HDFS NameNode web UI port: 9870

YARN job status web UI: 8088

History server: 19888
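If a web UI does not load, a quick first check is whether the port is reachable at all from your machine. The following minimal sketch tries a TCP connection to each of the ports listed above; the WEB_UI_PORTS mapping restates that list, and port_open and web_ui_url are helper names invented here. It assumes the hosts entries above are already in place so that master resolves.

```python
import socket

# Web UI ports from the list above
WEB_UI_PORTS = {
    "HDFS NameNode": 9870,
    "YARN": 8088,
    "History server": 19888,
}


def web_ui_url(host: str, port: int) -> str:
    """Build the plain-HTTP URL for a web UI."""
    return f"http://{host}:{port}"


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:   # refused, timed out, or name not resolvable
        return False


if __name__ == "__main__":
    for name, port in WEB_UI_PORTS.items():
        state = "reachable" if port_open("master", port) else "NOT reachable"
        print(f"{name}: {web_ui_url('master', port)} is {state}")
```

A port that is not reachable usually points to the service not running, the firewall still being active, or a missing hosts entry, in that rough order of likelihood for this setup.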

4. Testing

Test uploading a file to HDFS

Result: OK

Verify the MapReduce wordcount example

https://www.cnblogs.com/jpcflyer/p/8934601.html

https://www.cnblogs.com/jpcflyer/p/9005222.html
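For readers who want to know what output to expect from the wordcount job, here is a small local sketch of the same computation: a map step that emits (word, 1) pairs and a reduce step that sums them per word. It runs in a single process and only imitates the MapReduce data flow; the function names and sample lines are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every whitespace-separated word."""
    return [(word, 1) for word in line.split()]


def reduce_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Sum the counts for each word."""
    totals: Dict[str, int] = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    return dict(totals)


def wordcount(lines: Iterable[str]) -> Dict[str, int]:
    """Run map over every line, then reduce all pairs together."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return reduce_phase(pairs)


if __name__ == "__main__":
    sample = ["hello hadoop", "hello spark"]
    for word, count in sorted(wordcount(sample).items()):
        print(word, count)
    # hadoop 1
    # hello 2
    # spark 1
```

Running the real job on the same small input file and comparing the counts is a simple way to confirm that HDFS, YARN and MapReduce are all working together.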
